Current Landscape

As I write this article, technology companies find themselves in a transitional phase. With the advent of artificial intelligence, the way software is produced will change, and so will the type of professionals required to build it—if this transformation hasn’t already begun.

In the IT field, the most common professional roles include: architects and developers, quality assurance (QA) specialists, infrastructure engineers, database analysts, and all related variants of these specialties.

Architects and developers design and implement all product-related code.

QA specialists ensure that products meet quality requirements.

Infrastructure engineers manage company infrastructure, including servers, databases, cloud automation, and much more.

Database analysts handle databases, including schema design, data migration, and related tasks.

Obviously, there are other roles of a more administrative nature, but we won’t focus on them in this analysis.

The fundamental issue is that AI is redefining how the industry creates its products, with software being the first and most significantly affected sector. Software engineers, who until recently were highly sought after for their ability to produce code and deploy it to production, performed their work in a predominantly artisanal manner. Although good practices and processes have always been followed, most existing software has been designed and programmed by human minds and hands.

Today, Large Language Models (LLMs) have surpassed earlier, smaller language models (such as BERT) and have established themselves as the most powerful tool for the software industry.

Additionally, generative AI techniques such as vibe coding, agents, and MCP (Model Context Protocol) servers, when combined, could potentially replace a considerable portion of the traditional development work that currently dominates the software industry.

Consequently, technology companies, especially the largest ones, have begun replacing traditional development engineers with AI-specialized engineers.

What’s the Difference Between Traditional Development and AI-Powered Development?

Simply put, it will no longer be necessary for a developer to create an API, or for a DevOps specialist to deploy a cloud solution—an agent with those specific capabilities can do it.

How Do AI Agents Work?

AI agents are systems that combine language models (LLMs) with tools and execution capabilities. Unlike a simple chatbot, an agent can:

- Reason about what actions to take

- Execute tools and commands

- Observe the results

- Iterate until completing the task

The components and workflow below outline a small example of a deployment carried out by an AI agent.

Key System Components

- LLM (Language Model): The “brain” that reasons and makes decisions

- Agent: Orchestrator that coordinates between the LLM and tools

- Tools: APIs, CLI commands, file access, databases, etc.

- Environment: The actual system where actions are executed

Workflow

- User Input: Defines the high-level task

- Reasoning: The LLM analyzes which tools to use and in what order

- Execution: The agent invokes the necessary tools

- Observation: Receives the results of each action

- Iteration: The LLM decides the next step based on results

- Completion: When the task is finished, reports to the user

This cycle of Reason → Act → Observe repeats until the agent completes the task or determines it cannot continue.
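The cycle can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: `scripted_llm` is a hard-coded stand-in for a real model, and the tools `build_image` and `deploy_service` are hypothetical names that just return strings instead of touching real infrastructure.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    """One decision from the model: call a tool, or finish with an answer."""
    tool: Optional[str] = None
    argument: str = ""
    answer: str = ""


# Hypothetical tools; real ones would call a CLI, an API, or a cloud SDK.
def build_image(name: str) -> str:
    return f"image {name}:latest built"


def deploy_service(name: str) -> str:
    return f"service {name} deployed"


TOOLS: dict[str, Callable[[str], str]] = {
    "build_image": build_image,
    "deploy_service": deploy_service,
}


def scripted_llm(task: str, observations: list[str]) -> Step:
    """Stand-in for a real LLM: chooses the next step from what has been observed so far."""
    if len(observations) == 0:
        return Step(tool="build_image", argument=task)
    if len(observations) == 1:
        return Step(tool="deploy_service", argument=task)
    return Step(answer=f"done: {observations[-1]}")


def run_agent(task: str, llm, tools, max_steps: int = 5) -> str:
    """Reason -> Act -> Observe, repeated until the model finishes or the step limit is hit."""
    observations: list[str] = []
    for _ in range(max_steps):
        step = llm(task, observations)          # Reason: the model picks the next action
        if step.tool is None:
            return step.answer                  # Completion: report back to the user
        if step.tool not in tools:
            observations.append(f"error: unknown tool {step.tool}")
            continue
        result = tools[step.tool](step.argument)  # Act: the agent invokes the tool
        observations.append(result)               # Observe: the result feeds the next decision
    return "stopped: step limit reached"


print(run_agent("shop-api", scripted_llm, TOOLS))
```

The step limit plays the role of "determines it cannot continue": without some bound, a model that never produces a final answer would loop forever.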

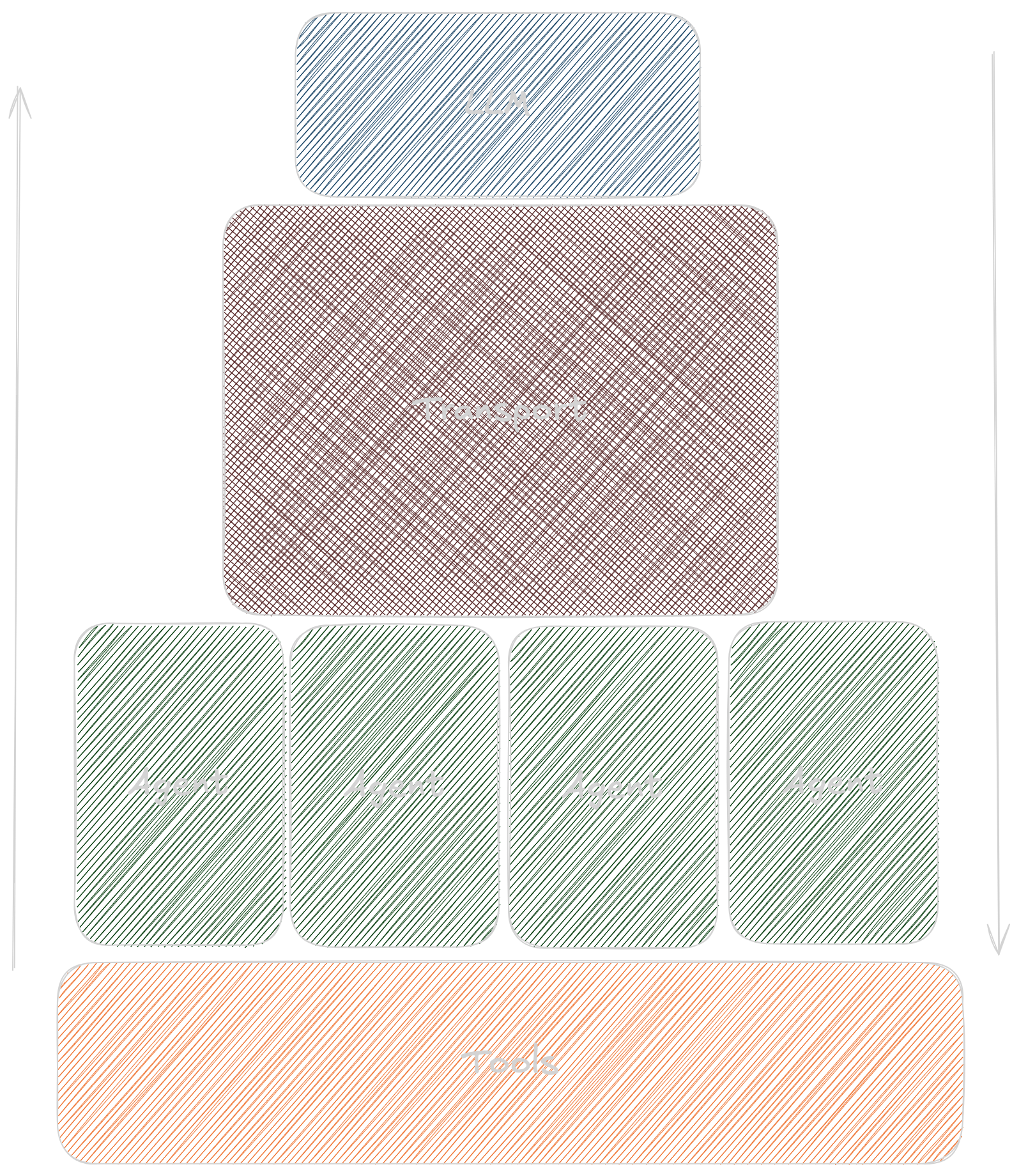

The accompanying image illustrates what I call the “tower of abstraction.” As with any process, there’s a natural evolution where components become progressively more specialized and their interactions become more concrete and optimized.

Consider this example: the human brain doesn’t directly “know” how to make the hand grip an object. It simply decides that a situation requires gripping and sends the signal to the specialized organ—in this case, the hand muscles. In the image, we can establish the following analogy: the LLM represents the brain, transport symbolizes the nerves, and each agent corresponds to a specific hand muscle.

Similarly, in the software world, the process evolved from implementing a single monolithic module to executing specific tasks in microservices orchestrated by a controller. This controller decides which microservice should be invoked in response to an event or condition. Applying this analogy to the image, the LLM corresponds to a Control Plane, transport to networking and routes, and agents to Pods/containers.

In this evolution, the LLM determines which tools to use and in what sequence, the agent invokes them, and the LLM then decides the next step based on the obtained results.

The next step in traditional development turned out to be perhaps more unexpected than logical. This evolution in the software industry (and many others) translates into delegating development, implementation, testing, and deployment work to intelligent agents. These can perform such work continuously and with superior efficiency compared to humans, consuming fewer resources and thus leaving behind the artisanal aspect of the process.

So What Will Happen to…?

Yes, the crucial question: what fate awaits traditional engineers who, day after day, deliver task after task?

Two clear alternatives present themselves: either we let events overwhelm us and the “tsunami” buries us under tons of water, or we reinvent and adapt ourselves (once again), learning to navigate the vast sea of changes ahead.

From now on, the industry will no longer require developers who write code from start to finish, engineers who deploy complete solutions, or analysts who exhaustively review code or data structures. Instead, the industry will demand engineers capable of orchestrating agents offered by other providers, as well as creating their own agents for their business’s specific use cases.

Work previously performed by teams of dozens will now be carried out by just a few, who will nevertheless produce the same amount or even more than those “small armies.”

Since creating products will be much simpler, numerous startups will likely emerge that, despite having few people, will achieve significant impact and establish themselves in the market. Additionally, many new positions will be created for those who learn to interact with and improve agents.

Education must undoubtedly transform as well, especially higher education, which still trains engineers with a “classical” profile who will enter a market that no longer needs them. Higher education institutions must reform their curricula urgently!

Similarly, software companies that intend to retain their engineers must encourage them to update their skills and make this transition as smoothly as possible. As engineers, we take pride in our capabilities, but we sometimes show stubbornness and reluctance, or even disbelief, in the face of change, especially when it doesn’t seem to favor us.

Where to Begin?

As a leader of an AWS User Group, one of my duties is to guide the community toward this future.

There’s no more powerful tool than education. Therefore, my first recommendation would be to pursue certifications related to this topic, created and designed by the very companies leading this transition. Personally, I believe there’s no better incentive to study than a certification, as the knowledge it requires is not only theoretical but also encompasses practical day-to-day mastery; consequently, those without that experience will be compelled to acquire it on their own.

The first and most important certification to begin this journey would be the “AWS Certified AI Practitioner,” which covers the fundamentals: what an LLM is, what a vector database is, what an agent is, how to create effective prompts to interact with an AI agent, among other aspects. Since it’s an AWS certification, it requires at least basic knowledge of many of its services and their minimum configuration. Therefore, reviewing the material for the “AWS Certified Cloud Practitioner” certification is also very beneficial; and of course, if you’re motivated to obtain it, all the better.

Once you’ve obtained the first certification, you can begin creating AI agents and thus gradually advance in the transition.

The first option is a fully managed service called Amazon Bedrock, which integrates numerous LLMs and offers tools for creating agents, integrating models, etc. Here you can find an exhaustive list of articles related to Amazon Bedrock.

AWS also offers an open-source framework called Strands Agents, code-oriented (currently in Python) and easy to use. Its GitHub repository contains excellent examples to get started, particularly the personal assistant example.

This example employs several integrated agents, such as those for information search, image search, and video search, among others; as well as a main agent responsible for answering user questions. It constitutes a very didactic example for understanding Strands’ capabilities in creating agents.
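The shape of that design, a main agent delegating to specialized agents, can be sketched in plain Python. This is a simplified illustration and deliberately does not use the Strands API: every name here (`search_agent`, `image_agent`, `video_agent`, `main_agent`) is hypothetical, and the keyword routing stands in for the decision an LLM would make in a real main agent.

```python
from typing import Callable

# Each specialist is modeled as a function from a request to a text answer.
Specialist = Callable[[str], str]


def search_agent(query: str) -> str:
    return f"[search] results for '{query}'"


def image_agent(query: str) -> str:
    return f"[image] pictures for '{query}'"


def video_agent(query: str) -> str:
    return f"[video] clips for '{query}'"


SPECIALISTS: dict[str, Specialist] = {
    "search": search_agent,
    "image": image_agent,
    "video": video_agent,
}


def main_agent(question: str) -> str:
    """Route the question to one specialist.

    Assumption: simple keyword matching replaces the LLM's choice of which
    specialist to call; general questions fall through to the search agent.
    """
    lowered = question.lower()
    for keyword in ("image", "video"):
        if keyword in lowered:
            return SPECIALISTS[keyword](question)
    return SPECIALISTS["search"](question)


print(main_agent("Find a video about Amazon Bedrock"))
print(main_agent("What is a vector database?"))
```

Treating each specialist as a callable with the same signature keeps the main agent unaware of how any of them works internally, which is what makes it easy to add or swap specialists.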

Once you’ve acquired the necessary experience, you could pursue the “AWS Certified Generative AI Developer - Professional” certification, which, at the time of writing this article, is in beta phase. Upon obtaining this professional-level certification, you’ll be able to demonstrate proficiency in developing AI-powered applications.

Although this certification is professional-level, it has no formal prerequisites. Even so, community sentiment suggests that the following certifications are ideal preparation:

Time will tell if this is the case.

Conclusion

If there’s one certainty in this life, it’s that everything changes. Therefore, we must all be prepared for the changes that will inevitably come.

Personally, I’ve begun my own transition journey and must confess that I’m enjoying it immensely. I’ve always been a “Developer Wannabe” who hadn’t found the time or courage to realize my aspirations. However, now I can do so without needing to invest so much time delving deeply into programming, and with the ability to create functional applications in significantly less time. I know my esteemed developer colleagues criticize vibe coding vehemently, but it’s imperative to shed prejudices, relearn, and open our minds, because this change, which has already begun, won’t stop, no matter who it displeases.
